| Flicker Free Animation |


| Introduction | Retrace Targetting | Triple Buffering | Infinite Buffering | Other Ideas |

| Introduction |

If you have never dealt with real time animation in your application before, you will eventually run into the issue of flickering and tearing. These are effects that occur due to collisions between graphics memory updating and display monitor refreshing. Typically these problems occur because your application is updating the display graphics memory directly, without regard to monitor refresh. Depending on the method, objects may flicker in and out in a strobing manner, or may tear (cut into two halves that appear slightly offset from one another as the object moves.)

In what follows, I present what I have learned in my experience to be the best solutions for this problem. It is assumed that you have some idea of how to render graphics in a reasonable way (if you use PlotPixel on a regular basis, this page is not for you.) I have no doubt that many "experts" will take issue with some of my ideas. If you have any questions about this page don't hesitate to mail me.

| Display Retrace Targetting |

The most typical practical solutions to the problem center on polling the target graphics hardware for the moment it finishes a monitor refresh, and then performing the graphics memory update. This works because there is an interval of time (called vertical blanking) when the monitor's scan gun has to literally reposition itself at the start of the display. During the vertical blanking stage none of the display is being refreshed, so modification of graphics memory will not cause any sort of flickering or tearing.
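As a minimal sketch of the polling step, assuming a VGA-compatible adapter in a color mode: the Input Status #1 register at port 0x3DA reports vertical retrace in bit 3. The port read is passed in through a hypothetical `port_reader` hook (not part of any real API) so the logic can be exercised without hardware; under a DOS compiler the hook would typically wrap the compiler's port input routine. `simulated_port` is purely a test stand-in.

```c
#include <assert.h>
#include <stdint.h>

#define VGA_INPUT_STATUS_1 0x3DA  /* VGA Input Status #1 (color modes) */
#define VGA_VRETRACE_BIT   0x08   /* bit 3: set while in vertical retrace */

/* Hypothetical hook: returns the byte read from an I/O port. */
typedef uint8_t (*port_reader)(uint16_t port);

/* Block until the START of the next vertical retrace.
   Returns the number of polls that did not end a wait loop. */
unsigned long wait_for_vblank_start(port_reader read_port)
{
    unsigned long polls = 0;
    assert(read_port != 0);
    /* If a retrace is already in progress, let it finish first;
       otherwise we could begin updating with little blanking time left. */
    while (read_port(VGA_INPUT_STATUS_1) & VGA_VRETRACE_BIT)
        polls++;
    /* Now wait for the bit to go from 0 to 1: retrace has just begun. */
    while (!(read_port(VGA_INPUT_STATUS_1) & VGA_VRETRACE_BIT))
        polls++;
    return polls;
}

/* Simulated port: retrace is active for 2 reads out of every 10. */
unsigned sim_tick = 0;
uint8_t simulated_port(uint16_t port)
{
    (void)port;
    return (sim_tick++ % 10u) < 2u ? VGA_VRETRACE_BIT : 0;
}
```

Waiting out a retrace that is already in progress costs up to one extra frame, but it guarantees that the update begins with the whole blanking interval available.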

If you have direct access to the display hardware, an easy way to implement this is to use hardware double buffering. This is a well known technique where you update a non-displayed portion of graphics memory, the back buffer, then repoint the graphics hardware's display memory to it at the start of vertical blank. Standard x86 PC VGA adapters have support for this sort of thing in their lower resolution graphics modes. One of the primary drawbacks of this technique is that your graphics hardware is required to have twice the graphics memory of a single display. This is problematic for using the highest resolutions, or any resolution at all if you cannot make assumptions about the target user's graphics hardware. VGA adapters have to, by definition, support at least 256K, as well as numerous display modes which consume no more than 64K for display.

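    while( !GameOver() ) {
        Toggle(DrawContext.address);    /* switch drawing to the off-screen (back) buffer */
        DrawFrame(DrawContext);         /* render the next frame off-screen */
        while( !VerticalBlankStart(DisplayContext) ) {
            /* empty: busy-wait for the start of vertical blank */
        }
        SetStartAddress(DisplayContext, DrawContext.address);   /* flip */
    }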

As an alternative which solves the unknown memory configuration problem, you can use software double buffering. Here you draw directly to (typically) faster and more abundant system memory buffers, and literally copy the data to a fixed graphics display memory configuration at the start of vertical blank. It is assumed that the copy performed by the CPU outruns the scan gun retrace, which is reasonable (even a lowly Intel 486 can do it with a reasonable graphics card.) One problem here is that you take the extra performance hit of copying an entire extra screen's worth of data per display frame. Another is that you typically cannot leverage the graphics hardware's asynchronous concurrent drawing features, commonly referred to as graphics acceleration, to assist you in rendering your scenes.
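Software double buffering can be sketched as follows, assuming a 320x200, one byte per pixel display (64,000 bytes, as in the low resolution VGA modes mentioned earlier). Everything here is illustrative: `display_memory` is an ordinary array standing in for the adapter's memory, `vblank_waiter` is a hypothetical hook for whatever retrace wait your platform offers, and `draw_frame` is a placeholder renderer.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

enum { SCREEN_W = 320, SCREEN_H = 200, SCREEN_BYTES = SCREEN_W * SCREEN_H };

/* System-memory back buffer: all drawing happens here. */
uint8_t back_buffer[SCREEN_BYTES];

/* Stand-in for the adapter's display memory (an assumption of this sketch). */
uint8_t display_memory[SCREEN_BYTES];

/* Hypothetical hook that blocks until vertical blank starts. */
typedef void (*vblank_waiter)(void);

/* Placeholder renderer: fill the frame with a color taken from the frame number. */
void draw_frame(uint8_t *dst, unsigned frame)
{
    memset(dst, (int)(frame & 0xFFu), SCREEN_BYTES);
}

/* Render off-screen, then copy the finished frame during vertical blank. */
void present_frame(unsigned frame, vblank_waiter wait_vblank)
{
    assert(wait_vblank != 0);
    draw_frame(back_buffer, frame);   /* can take as long as it needs */
    wait_vblank();                    /* synchronize with the monitor */
    memcpy(display_memory, back_buffer, SCREEN_BYTES);  /* the extra per-frame copy */
}

/* Test double: counts how many times we waited for vertical blank. */
unsigned vblank_waits = 0;
void fake_wait_vblank(void) { vblank_waits++; }
```

The `memcpy` is the per-frame cost discussed above; the display only ever sees completed frames, because nothing is written to `display_memory` while a frame is still being drawn.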

Finally, if you can precisely time the monitor's scan line updating, and your rendering algorithms draw in display scan line order, you can simply wait for vertical retrace start before firing off your drawing algorithm (making sure it never gets ahead of the monitor's retracing.) This technique is called scanline chasing. This has the advantage of not requiring large extra buffers, but has the disadvantage of leaving far less flexibility in how you implement your drawing algorithm.

| Triple Buffering Display Retrace Targetting |

The big problem with the vertical blank polling methods described above is that they wait for the monitor scan gun to enter a known state before doing any useful work (typically taking up to a 60th or 75th of a second, of course!) Since animation and game programs are typically required to perform as fast as possible, this is a highly undesirable bottleneck.

For now, let's just consider the situation of a realtime game.

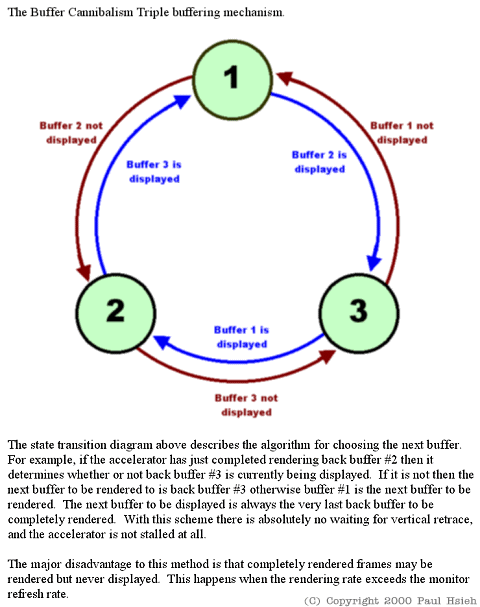

Working around this typically involves writing the buffer update event as a periodic interrupt service routine (ISR), freeing the application's mainline thread to work independently of periodic display hardware synchronization. The idea is to write a protocol between the ISR and the mainline thread to manage buffer updates in a way that lets the mainline thread get as much uninterrupted work done as possible. Roughly speaking: the ISR is set to be called at the start of vertical blank. When the display triggers the ISR, it checks a state maintained by the mainline thread which indicates the address of the newest completely rendered display buffer, updates display graphics memory if required, and then updates a state which indicates which frames are obsolete. The mainline thread, at the start of the rendering phase, grabs an obsolete buffer and draws to it (this is where you spend the bulk of your graphics optimization effort); when it is done, it marks that buffer as the newest frame (unmarking the previous "newest frame".)

Of course there is an issue here: How does the mainline thread grab an obsolete buffer without having to poll? The answer here is fairly straightforward. We know that the ISR will only display at most one buffer at a time, and the mainline thread will only mark at most one buffer as new. Thus while each task is in its working phase, from their perspectives, there are at most two non-obsolete (let's call them busy) buffers. Thus if there are three working graphics buffers, there will always be at least one free (obsolete) at any given time. This is the technique used in the popular PC video game DOOM.

    while( !GameOver() ) {
        GetObsoleteBuffer(&DrawContext.index);  /* neither displayed nor newest */
        DrawFrame(DrawContext);
        NewFrame.index = DrawContext.index;     /* publish the finished frame to the ISR */
    }

    ...

    GetObsoleteBuffer(int *index) {
        *index = (NewFrame.index + 1) % 3;
        if( DisplayContext.index == *index ) {
            *index = (NewFrame.index + 2) % 3;
        }
    }

    /* Clearly there is no standard syntax for
       this but I hope the meaning is clear. */
    ISR( SignalOn( VerticalBlankStart( DisplayContext ) ) ) {
        DisplayContext.index = NewFrame.index;
        SetStartAddress(DisplayContext, BackBufferAddress[DisplayContext.index]);
    }

Implementation of this doesn't take too much imagination: either (1) maintain a structure with "newest" and "current display" pointers/handles, or (2) assign all frames a "frame number", and maintain a "newest" and a "current" frame number watermark. With the major bottleneck of waiting for periodic display refresh removed, you might even be able to use software triple buffering instead of hardware triple buffering (for low resolutions on a PC, this is desirable as it reduces hardware requirements, and thus widens your potential market.) On an x86 PC, under DOS, the ISR itself should be called by a high resolution timer interrupt vector, whenever a vertical blank interval starts. On a Windows PC, Microsoft's DirectDraw API provides other mechanisms to do this which can leverage interrupts initiated by the graphics device itself, if your graphics device and drivers support this feature.
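Option (1) might be sketched like this, using buffer indices as the "handles". The names (`flip_state`, `get_obsolete_buffer`, `on_vertical_blank`) are made up for illustration, and plain `volatile` fields are an assumption that suits a single-CPU machine where the ISR interrupts the mainline thread; a multiprocessor implementation would need proper atomic operations. The last function exhaustively checks the "always one free buffer" argument for three buffers.

```c
#include <assert.h>

enum { NUM_BUFFERS = 3 };

/* State shared between the mainline thread and the ISR. */
typedef struct {
    volatile int newest;     /* last completely rendered buffer (mainline writes) */
    volatile int displayed;  /* buffer currently scanned out (ISR writes) */
} flip_state;

/* Pick a buffer that is neither the newest nor the displayed one. */
int get_obsolete_buffer(const flip_state *s)
{
    int index;
    assert(s != 0);
    index = (s->newest + 1) % NUM_BUFFERS;
    if (index == s->displayed)
        index = (s->newest + 2) % NUM_BUFFERS;
    return index;
}

/* Body of the vertical blank ISR: show the newest finished frame.
   Returns the index a real ISR would hand to the display hardware. */
int on_vertical_blank(flip_state *s)
{
    s->displayed = s->newest;
    return s->displayed;
}

/* Try every (newest, displayed) pair; return 1 if the chosen buffer
   is always distinct from both, 0 otherwise. */
int selection_is_always_safe(void)
{
    flip_state s;
    int n, d;
    for (n = 0; n < NUM_BUFFERS; n++) {
        for (d = 0; d < NUM_BUFFERS; d++) {
            int free_index;
            s.newest = n;
            s.displayed = d;
            free_index = get_obsolete_buffer(&s);
            if (free_index == n || free_index == d)
                return 0;
        }
    }
    return 1;
}
```

Note that if the ISR fires while the mainline thread is drawing, `displayed` can only change to `newest`, which the draw buffer was already chosen to avoid, so no polling or locking is needed on the mainline side.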

So what are the drawbacks of this method? Primarily the three buffer requirement, and also the fact that it is designed around a display performance optimization oriented algorithm. If it turns out that you can update the display buffers in your main thread far faster than the ISR flips them (that is to say you can render at greater than your monitor's refresh rate) then you will end up overemphasizing graphics performance, when other bottlenecks may exist in your application. It is also complicated to set up, since you need an ISR triggered by a periodic hardware event, which ordinarily requires both OS and hardware support (kiss portability goodbye.)

| Infinite Buffering |

The other important animation situation, of course, is video playback. That's when the frames are known and compressed in advance and need to be decompressed and displayed in an exact sequence with an exact timing.

One of the problems here is that the video source is usually also perfectly synchronized with an audio track (the voices need to match the lip movements.) This is beyond the scope of this topic (I don't know much about what sort of tricks can be played on the audio, so it's probably best I not say anything.)

However, in general, the video is compressed in a lossy compression format that can take a variable amount of time to decompress each frame. The usual way to improve overall tolerance of long frames is to have as many buffers as the system will practically allow, with frames decompressed into each buffer in a rotating ring as fast as possible. A separate thread handles frame updates, choosing the buffers in oldest to youngest order. If there are no frames available to be flipped to, then this is communicated to the decompression thread, which then must employ a strategy to perform frame skipping.
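The rotating ring can be sketched as a small fixed-size queue of decoded frames. This is a single-threaded illustration only: the names are hypothetical, the ring size of 8 is arbitrary, a real player would guard the shared fields with whatever synchronization its platform provides, and "skip exactly one frame per underrun" is just one simple policy chosen for the example.

```c
#include <assert.h>

enum { RING_SIZE = 8 };  /* arbitrary; use as many as memory practically allows */

typedef struct {
    int frame_no[RING_SIZE]; /* which video frame each slot holds */
    unsigned head;           /* next slot the decoder fills */
    unsigned tail;           /* oldest decoded slot, next to be displayed */
    unsigned count;          /* decoded frames waiting to be shown */
    int underrun;            /* set when the display had nothing to flip to */
} frame_ring;

void ring_init(frame_ring *r)
{
    assert(r != 0);
    r->head = r->tail = r->count = 0;
    r->underrun = 0;
}

/* Decoder side: returns 1 if stored, 0 if the ring is full
   (the decoder is as far ahead as it can get). */
int ring_push(frame_ring *r, int frame_no)
{
    if (r->count == RING_SIZE)
        return 0;
    r->frame_no[r->head] = frame_no;
    r->head = (r->head + 1) % RING_SIZE;
    r->count++;
    return 1;
}

/* Display side, called once per frame period: returns the oldest
   decoded frame, or -1 after flagging an underrun for the decoder. */
int ring_pop(frame_ring *r)
{
    int f;
    if (r->count == 0) {
        r->underrun = 1;
        return -1;
    }
    f = r->frame_no[r->tail];
    r->tail = (r->tail + 1) % RING_SIZE;
    r->count--;
    return f;
}

/* Decoder's skipping policy (an assumption): drop one frame per underrun.
   Returns the frame number the decoder should work on next. */
int next_frame_to_decode(frame_ring *r, int next)
{
    if (r->underrun) {
        r->underrun = 0;
        return next + 1;
    }
    return next;
}
```

The deeper the ring, the longer a slow-to-decode frame can be absorbed before the display side runs dry and a skip is forced.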

Note that this technique is not ideal for the realtime game rendering situation described above. Fundamentally, in realtime games, the input and game world information is up to date and available only in the present and is supposed to be rendered as quickly as possible. So decreasing latency by throwing out intermediate steps/frames that are not representative of the most up to date input/world information is desirable. However in video, all the frames are known, or can be known in advance, and the output speed target, which is supposed to use all frames, is also known and fixed.

| Other Ideas |

Ok, so triple (or infinite) buffering sounds great, but for one reason or another, you just can't use it. What else is there? The techniques described above center around doing updates which avoid the monitor retrace raster scan completely. But are there other ways of dealing with flickering and tearing?

Indeed, another approach is to minimize flickering and tearing, rather than removing it completely. The motivation here is to use more natural rendering algorithms, which are object, or sprite based instead of frame based, that may be more convenient for your application, but to implement them in such a way as to minimize flickering and tearing. To do this you have to understand what causes flickering and what causes tearing.

Flickering is caused by the fact that in updating a region of graphics memory you compose it as an overlapping series of layers (usually the background and the sprites that go on top.) Doing this while the display is refreshing the area introduces the possibility that it will occasionally and (given the cyclical nature of most real time computer applications) periodically refresh that area with background layers that are supposed to be occluded by layers on top of them. This background bleeding through, almost like a stroboscope, is what causes flickering.

To remove flickering, then, you need to avoid the overdrawing of pixels. If you have organized your graphics so that it is primarily just sprites on a static background, then when you update your sprites, you should do it in such a way that you don't erase any old sprite pixels that will end up being replaced by new pixels from newly positioned/animated sprites. This is easier said than done, and to be honest is the most complicated algorithm (to do optimally) referred to on this entire page. But it is possible, and flickering is eliminated as a result.
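To make the idea concrete, here is a deliberately simplified sketch and not the optimal algorithm alluded to above: a single opaque, rectangular, single-color sprite moving over a static background. Each pixel in the bounding box of the old and new positions is written at most once, directly with its final value. The tiny dimensions, the `writes` instrumentation array and all names are assumptions made for the example; sprites with transparent pixels or multiple overlapping sprites need per-pixel masks and considerably more bookkeeping.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

enum { W = 16, H = 8, SW = 4, SH = 3 };  /* tiny screen and sprite */

uint8_t background[H][W];  /* static background (all zero here) */
uint8_t screen[H][W];      /* stand-in for display memory */
uint8_t writes[H][W];      /* instrumentation: writes per pixel in the last move */

static int inside(int x, int y, int rx, int ry)
{
    return x >= rx && x < rx + SW && y >= ry && y < ry + SH;
}

/* Move the sprite from (ox,oy) to (nx,ny). No pixel is first erased to
   background and then redrawn, so the display can never catch a pixel
   showing background that is about to be covered again. */
void move_sprite(int ox, int oy, int nx, int ny, uint8_t color)
{
    int x, y;
    int x0 = ox < nx ? ox : nx, x1 = (ox > nx ? ox : nx) + SW;
    int y0 = oy < ny ? oy : ny, y1 = (oy > ny ? oy : ny) + SH;
    assert(x0 >= 0 && y0 >= 0 && x1 <= W && y1 <= H);
    memset(writes, 0, sizeof writes);
    for (y = y0; y < y1; y++) {
        for (x = x0; x < x1; x++) {
            if (inside(x, y, nx, ny)) {
                screen[y][x] = color;             /* new sprite pixel */
                writes[y][x]++;
            } else if (inside(x, y, ox, oy)) {
                screen[y][x] = background[y][x];  /* uncovered: restore */
                writes[y][x]++;
            }
        }
    }
}

/* Largest number of writes any single pixel received in the last move. */
int max_writes_per_pixel(void)
{
    int x, y, m = 0;
    for (y = 0; y < H; y++)
        for (x = 0; x < W; x++)
            if (writes[y][x] > m)
                m = writes[y][x];
    return m;
}
```

Contrast this with the naive "erase the old sprite, then draw the new one" approach, where every pixel in the overlap of the two positions is written twice and briefly holds the background color.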

Tearing occurs when an object is being updated using a flickering removal algorithm such as the one above (or using a double/triple buffering algorithm but ignoring the monitor retrace), and the monitor scan gun catches it as it's being updated, refreshing the top half with new pixels and the bottom half with old pixels (or vice versa, depending on what direction you are updating your sprite/buffer.) The result is a horizontally sheared, or vertically stretched or compressed sprite image.

The only way I know of eliminating tearing without being aware of the retrace state of the monitor is to use image filtering (motion blur) on your display buffer. This typically only works well for (mostly) horizontally moving scenes on natural images, such as motion picture playback, and can be a large implementation/performance issue. Not surprisingly, this technique is not very widely applicable.

However, as an intrinsic benefit of using a flicker reducing sprite oriented algorithm, far fewer total pixels are drawn, and performance can increase substantially. That means that you can render sprite motion at much higher granularity. This will, in and of itself, reduce tearing substantially, since torn sprites will be sheared/stretched/compressed by smaller motion differentials (ideally a single pixel), and thus be less noticeable to the human eye.

The obvious drawbacks of this technique are that it does not completely remove the effects of tearing and it is not appropriate for real time graphics applications which animate all or most of the pixels on the screen (such as scrolling or the currently popular full motion 3D type of games.)

You should note that the flicker reducing algorithm referred to above is not the same as delta framing which only reduces the number of pixels updated without reducing the actual flickering (a stroboscope is still a stroboscope, no matter how fast you run it.) By buffering and clipping appropriately, though, obviously it would be possible to merge flicker reducing methods with delta framing.

However, there are considerable advantages to this algorithm over buffering/hardware retrace based algorithms. When applicable, the performance differences can be orders of magnitude (consider games like Pac Man, Break Out, Tetris, or other applications like menu driven GUIs.) What's more, this sort of algorithm can often be used in conjunction with buffering methods (it's a great way to add a cursor to your 3D real time shoot 'em up.) By using such methods, you can often prototype and debug your rendering algorithms more quickly and easily than with buffered rendering methods.

Updated 08/04/99

Copyright © 1997, 1998, 1999 Paul Hsieh. All Rights Reserved.